I'm a research assistant at the Haptics and Virtual Reality Lab and an MS-PhD combined student at Kyung Hee University in South Korea. I'm advised by Professor Seokhee Jeon and work on data-driven modeling and rendering of haptic properties to generate realistic haptic feedback in VR environments.

My research primarily focuses on modeling and rendering haptic textures using both online and offline approaches. I specialize in handling complex time-series data and applying signal processing techniques. I have also applied these skills to teleoperation systems, where real-time and accurate rendering of haptic textures is crucial for remote operators.

Additionally, I explore the development of novel encountered-type haptic devices, such as haptic drones and wearable haptic devices, for VR and AR applications.

News

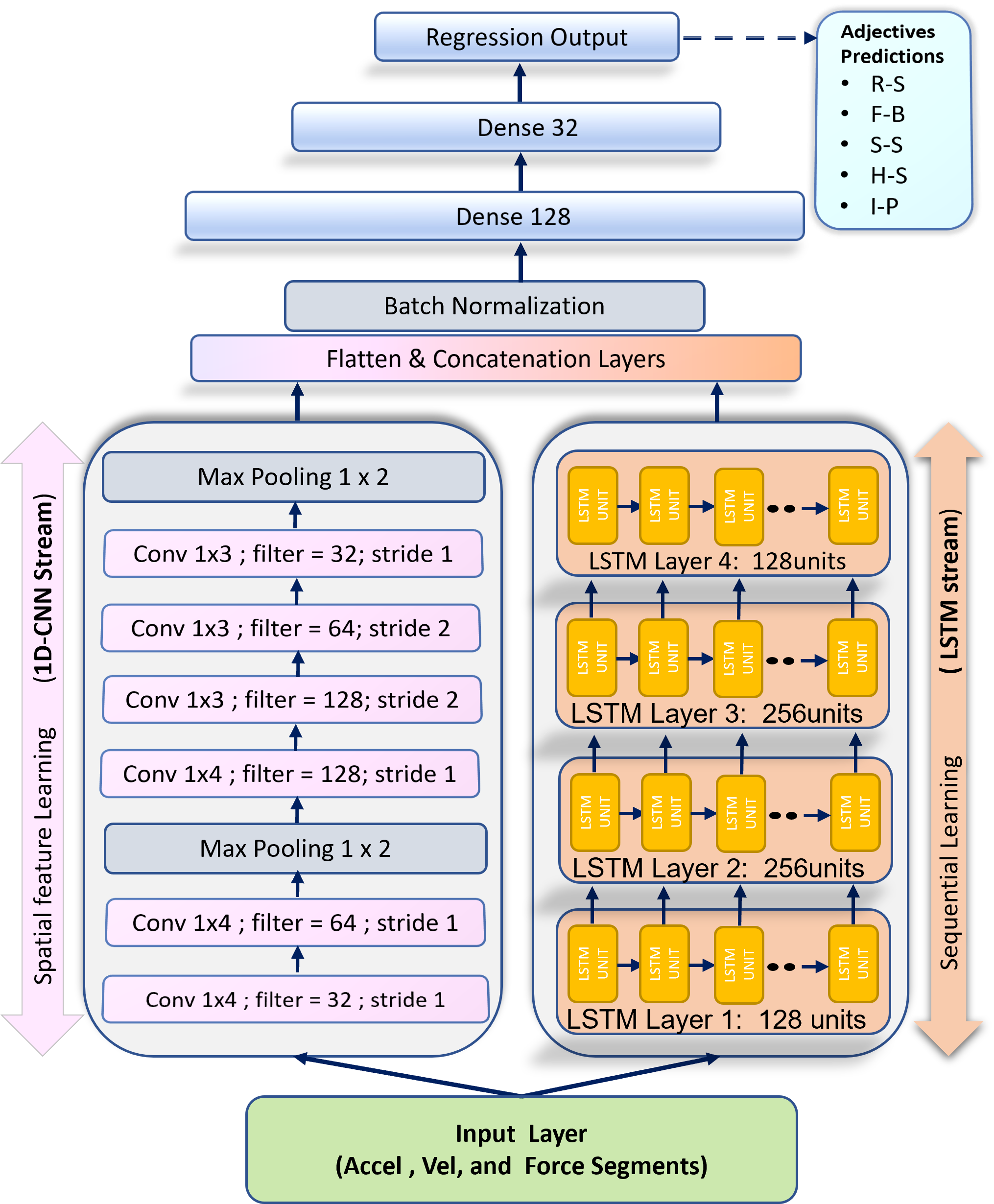

- One paper was accepted at the VRST-2023 conference: "Predicting Perceptual Haptic Attributes of Textured Surface from Tactile Data Based on Deep CNN-LSTM Network" (Sept 2023).

- One paper was accepted at the KCC-2023 conference: "Design and Evaluation of Lightweight Deep Learning Models for Synthesizing Haptic Surface Textures" (June 2023).

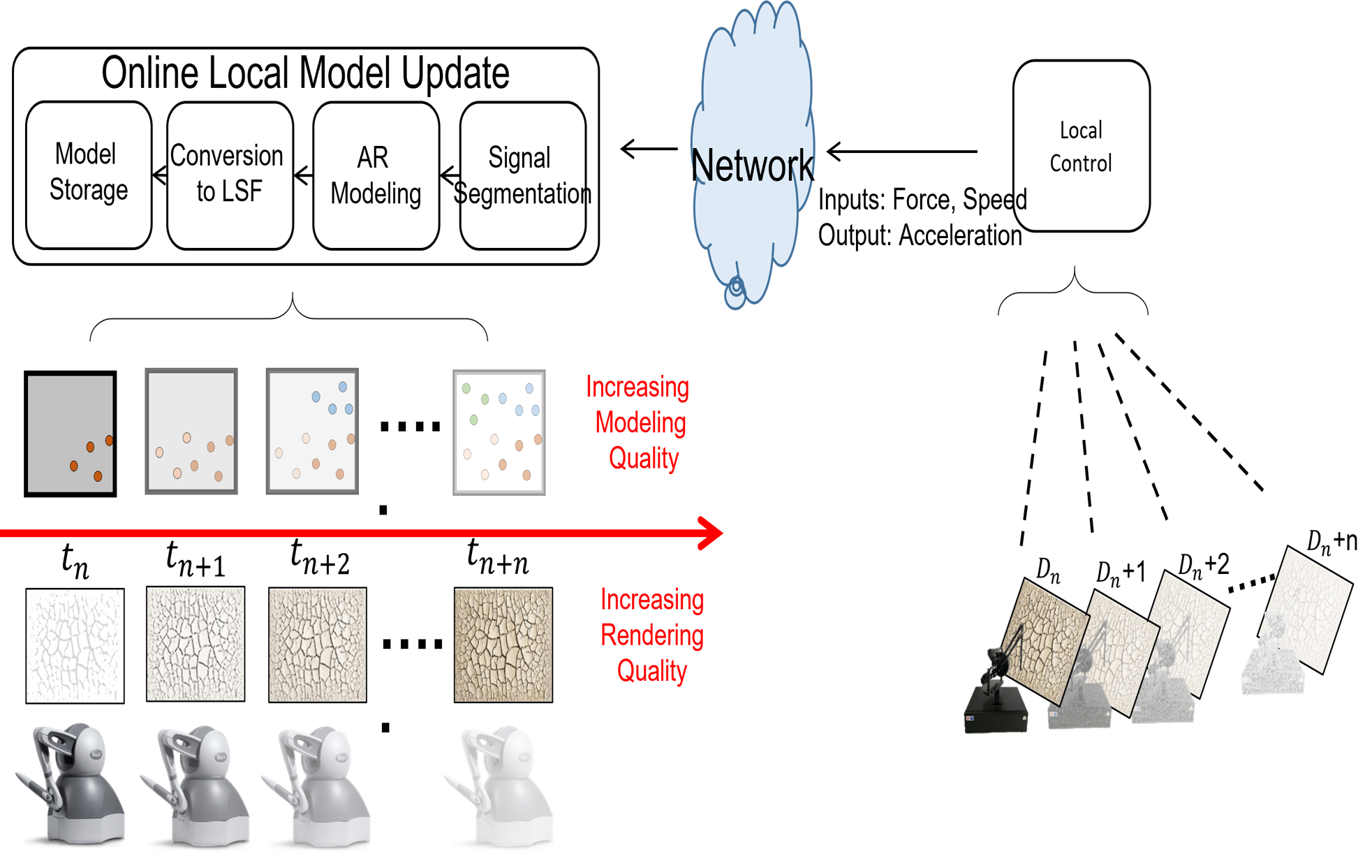

- I will be presenting our paper on model-mediated teleoperation for online texture modeling and rendering at ICRA-2023 on 1 June 2023 at ExCeL London.

- One paper was accepted at the UR-2023 conference: "Drone Haptics for 3DOF Force Feedback" (April 2023).

- One paper was accepted at the ICRA-2023 conference: "Model Mediated Teleoperation for real time haptic texture modeling and rendering" (Feb 2023).

Selected Publications

You can also find the full list of my publications here

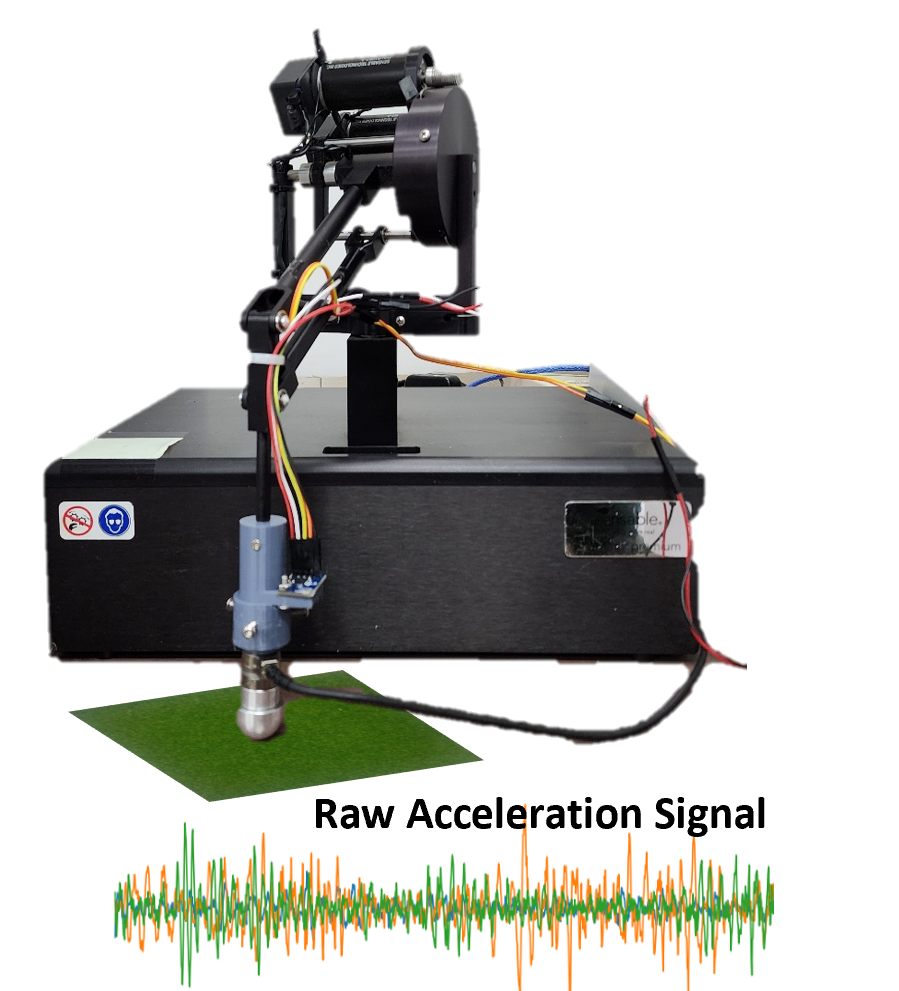

| Predicting Perceptual Haptic Attributes of Textured Surface from Tactile Data Based on Deep CNN-LSTM Network (VRST 2023). This paper presents a method to predict human-perceived haptic attributes from tactile signals (acceleration) when a surface is stroked. Using data from 25 texture samples, a five-dimensional haptic space is defined through human feedback, while a physical signal space is created from tool-based interactions. A CNN-LSTM network maps between these spaces. The resulting algorithm, which translates acceleration data to haptic attributes, demonstrated superior performance on unseen textures compared to other models. |

| Model-Mediated Teleoperation for Remote Haptic Texture Sharing: Initial Study of Online Texture Modeling and Rendering (ICRA 2023). This paper presents the first model-mediated teleoperation (MMT) framework capable of sharing surface haptic texture. It enables the collection of physical signals on the follower side, which are used to build and update a local texture simulation model on the leader side. This approach provides real-time, stable, and accurate feedback of texture. The paper includes an implemented proof-of-concept system that showcases the potential of this approach for remote texture sharing. |

| DroneHaptics - Encountered Type Haptic Interface Using Dome-Shaped Drone for 3-DoF Force Feedback (UR 2023). This paper introduces a dome-shaped haptic drone with a hemispherical cage made of aluminum mesh. The cage enables controllable 3D force feedback, improving usability and user safety. Experimental measurements and mathematical formulations establish an accurate force-thrust relationship. The system's force rendering accuracy was evaluated, yielding an error of less than 8.6%, which supports perceptually accurate force feedback. |

| Surface Texture Classification Based on Transformer Network (HCI Korea, 2023). In this study, we developed a new transformer-based deep learning model for surface texture classification from haptic data (i.e., interaction speed, applied force, and produced vibration signals). This approach leverages self-attention to learn the complex patterns and dynamics of time-series data. To the best of our knowledge, this is the first time that the transformer or its variants have been used for surface texture classification using tactile information. As a proof of concept, we collected data for 9 different textures, and the evaluation experiments showed that the model achieved state-of-the-art classification accuracy. |

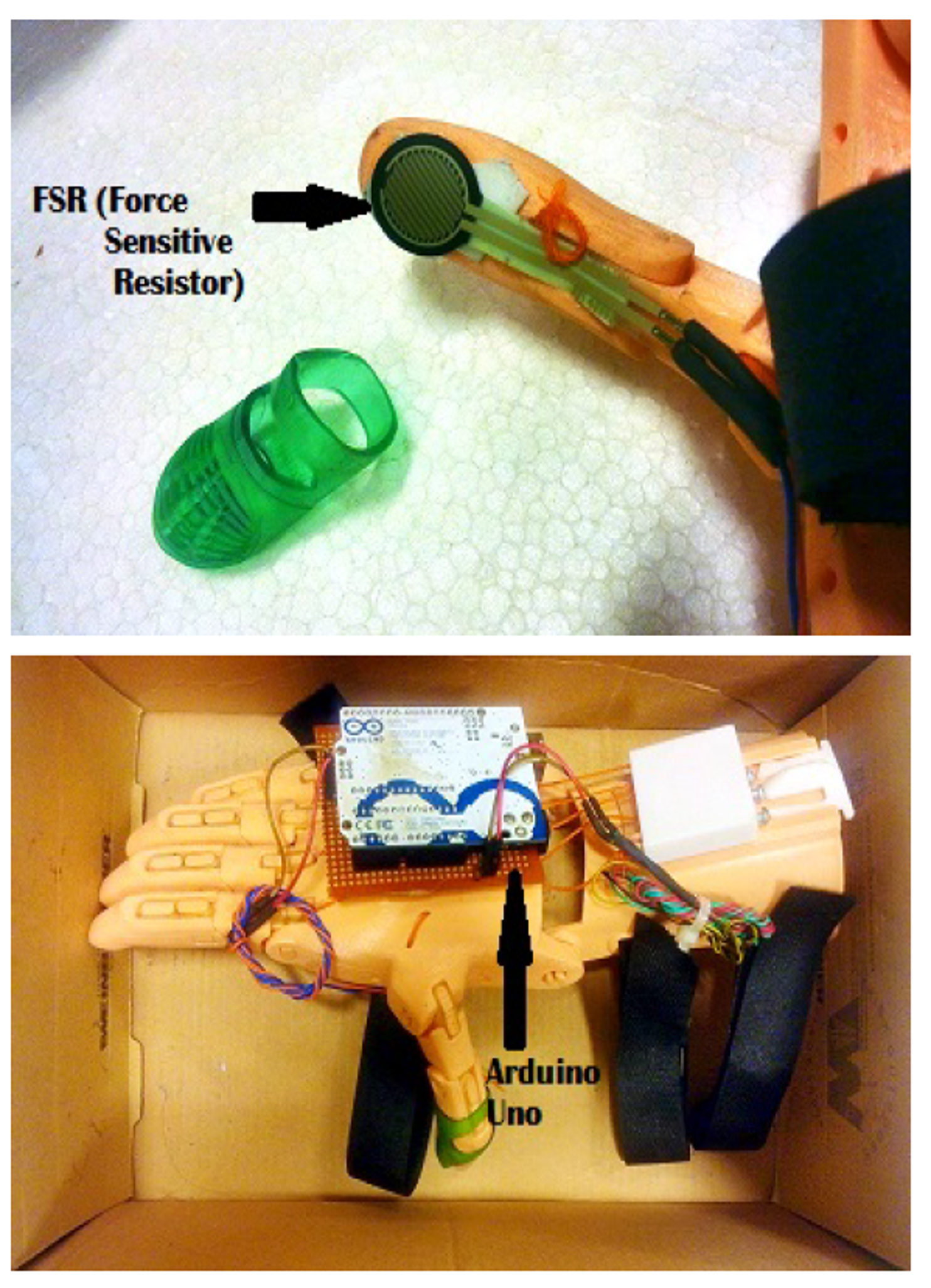

| Vibrotactile Stimulation for 3D Printed Prosthetic Hand (ICRAI, 2016). In this study, we address the significant limitation posed by the absence of haptic feedback in prosthetics. Emphasizing the potential of closed-loop prosthetics, our objective is to provide amputees with both exteroceptive (environmental pressure) and proprioceptive (awareness of joint positions) feedback through specialized sensory systems. To this end, we incorporated coin-shaped vibration motors and 0.5-inch circular FSRs to deliver nuanced feedback from the fingertip. For an economical solution, a 3D printed hand was adopted. Our evaluation involved six healthy subjects and one amputee, with the results affirming the efficacy of our approach. |